Quantum computing, a revolutionary field harnessing the principles of quantum mechanics, promises to transform the landscape of computation. This beginner’s guide will demystify the core concepts of quantum computing, providing a foundational understanding of its potential and challenges. From qubits and superposition to entanglement and quantum algorithms, we’ll explore the key elements driving this cutting-edge technology. If you’re curious about the power of quantum computing and eager to learn more, this guide is your starting point.

This comprehensive introduction to quantum computing assumes no prior knowledge of the field. It explains the fundamental differences between classical and quantum computing, and how quantum phenomena such as superposition and entanglement could enable quantum computers to tackle certain problems that are intractable for even the most powerful classical supercomputers. This beginner’s guide also looks at potential applications of quantum computing across diverse fields, including medicine, materials science, and cryptography, setting the stage for a deeper exploration of this transformative technology.

What Is Quantum Computing?

Quantum computing is a revolutionary type of computation that harnesses the principles of quantum mechanics to solve certain complex problems that are beyond the practical reach of classical computers. Whereas classical computers store information in bits, each of which is either 0 or 1, quantum computers use quantum bits, or qubits.

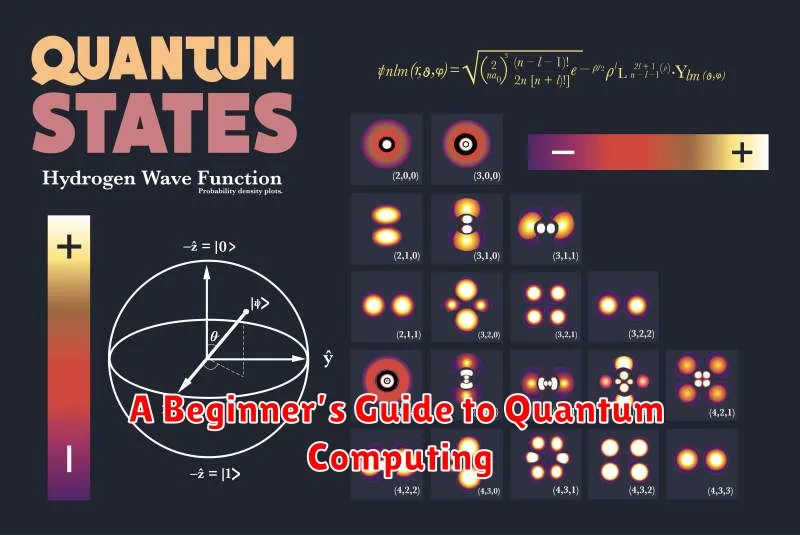

Qubits rely on quantum phenomena such as superposition and entanglement. Superposition allows a qubit to exist in a weighted combination of 0 and 1 until it is measured, at which point it yields a single 0 or 1. Entanglement links two or more qubits so that their measurement outcomes are correlated, even when the qubits are physically separated. Together, these properties mean that the state of n qubits is described by 2^n numbers (amplitudes), and quantum algorithms use interference between those amplitudes to make correct answers more likely, offering the potential to tackle problems that are currently intractable.
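To make "a combination of 0 and 1" concrete: in the standard textbook description, a qubit's state is written α|0⟩ + β|1⟩, where α and β are complex amplitudes, and measuring it yields 0 with probability |α|² and 1 with probability |β|². The sketch below simulates this on an ordinary computer; the function names are our own illustrative choices, not any quantum library's API.

```python
import math
import random

def make_qubit(alpha: complex, beta: complex) -> tuple[complex, complex]:
    """Return the normalized single-qubit state alpha|0> + beta|1>."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("a qubit state needs at least one nonzero amplitude")
    return alpha / norm, beta / norm

def measurement_probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """Probabilities of reading 0 and 1: the squared magnitudes of the amplitudes."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(state: tuple[complex, complex], rng: random.Random) -> int:
    """Simulate one measurement: the superposition yields a single 0 or 1."""
    p0, _ = measurement_probabilities(state)
    return 0 if rng.random() < p0 else 1

plus = make_qubit(1, 1)  # equal superposition of 0 and 1
p0, p1 = measurement_probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")  # P(0) = 0.50, P(1) = 0.50

rng = random.Random(42)
samples = [measure(plus, rng) for _ in range(1000)]
print("fraction of 1s:", sum(samples) / len(samples))  # close to 0.5
```

Each individual measurement gives a plain 0 or 1; the superposition shows up only in the statistics over many runs.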

Qubits vs Classical Bits

The fundamental difference between quantum computing and classical computing lies in how information is stored. Classical computers use bits, which can represent either 0 or 1. Quantum computers use qubits.

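Through superposition, a qubit can represent 0, 1, or a combination of both at the same time. As a result, describing the joint state of n qubits takes 2^n amplitudes, whereas n classical bits hold just one n-bit value. Measuring the qubits, however, still returns only n classical bits, so quantum algorithms must be designed to steer the amplitudes toward useful answers.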
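The gap between n values and 2^n amplitudes is easy to quantify. The sketch below assumes a brute-force classical simulator that stores each amplitude as a 16-byte complex number (two 64-bit floats) and computes how much memory the full state of n qubits would need; the constant and function names are illustrative.

```python
# Brute-force classical simulation stores one complex amplitude per basis state.
# Assumption: 16 bytes per amplitude (two 64-bit floats, like a complex128).
BYTES_PER_AMPLITUDE = 16

def amplitudes_needed(num_qubits: int) -> int:
    """An n-qubit state has one amplitude for each of the 2**n bit patterns."""
    return 2 ** num_qubits

def state_vector_bytes(num_qubits: int) -> int:
    """Memory needed to hold every amplitude of an n-qubit state."""
    return amplitudes_needed(num_qubits) * BYTES_PER_AMPLITUDE

for n in (10, 20, 30, 40):
    print(f"{n} qubits: {amplitudes_needed(n):,} amplitudes, "
          f"{state_vector_bytes(n):,} bytes")
# 30 qubits already need 16 GiB; 40 qubits need 16 TiB.
```

Each extra qubit doubles the memory, which is one reason large quantum systems cannot simply be simulated classically. It does not mean a quantum computer can read out all 2^n amplitudes: a measurement still returns only n bits.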

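Another key difference is entanglement. When qubits are entangled, their measurement outcomes are correlated: measuring one immediately tells you what a measurement of the other will show, regardless of the distance separating them. These correlations cannot be used to send information faster than light, but they are a resource with no classical equivalent, and they contribute to the power of quantum computations.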
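As an illustration, the sketch below samples measurements of the best-known entangled state, the Bell state (|00⟩ + |11⟩)/√2, stored as four amplitudes. Each qubit on its own looks like a fair coin, yet the two outcomes always agree. This is a classical simulation of one measurement setting only; it reproduces the correlation but not the deeper features (such as Bell-inequality violations) that make entanglement genuinely non-classical. Names like `sample` are our own.

```python
import math
import random

# Two-qubit state as four amplitudes over the basis states 00, 01, 10, 11.
BASIS = ["00", "01", "10", "11"]
# Bell state (|00> + |11>) / sqrt(2): a maximally entangled pair.
bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]

def sample(state: list[float], rng: random.Random) -> str:
    """Measure both qubits; outcome probabilities are squared amplitudes."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(BASIS, weights=probs, k=1)[0]

rng = random.Random(7)
results = [sample(bell, rng) for _ in range(1000)]

first_is_one = sum(r[0] == "1" for r in results) / len(results)
agree = all(r[0] == r[1] for r in results)
print(f"first qubit = 1 in {first_is_one:.0%} of runs")  # roughly 50%
print("qubits always agree:", agree)                     # True
```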

How Quantum Computers Work

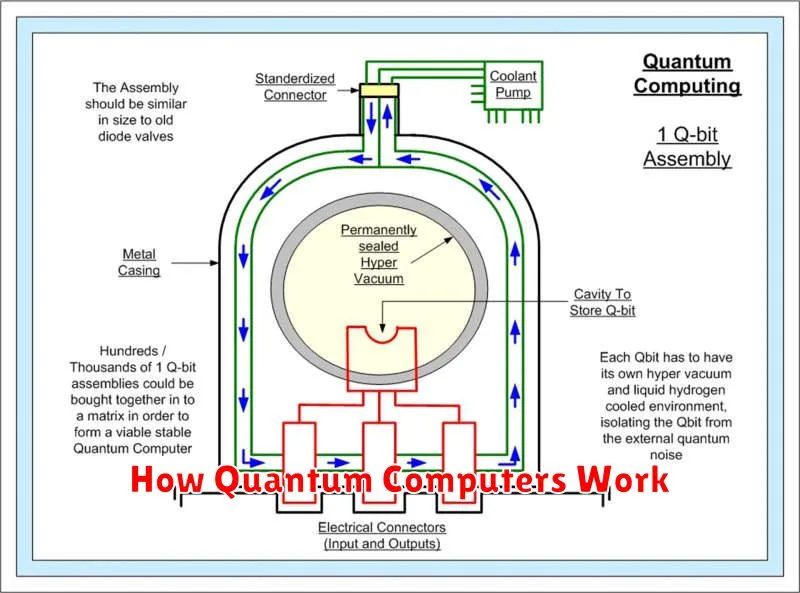

Quantum computers apply the principles of quantum mechanics to perform calculations. As described above, they work with qubits rather than classical bits.

Through superposition, each qubit can hold a combination of 0 and 1, so a register of qubits can be placed in a combination of many bit patterns at once.

Through entanglement, two or more qubits become linked so that their measurement outcomes are correlated, regardless of the distance separating them. This interconnectedness lets a quantum computer work with states that would take enormous amounts of information to describe with classical bits.

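Quantum gates, analogous to logic gates in classical computing, manipulate qubits and alter their states. A quantum algorithm is a sequence of such gates, ending with a measurement that reads the qubits out as ordinary bits. Well-designed algorithms arrange for the amplitudes of wrong answers to cancel out and those of right answers to reinforce one another, which lets them solve certain types of problems significantly faster than the best known classical algorithms.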
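Mathematically, each gate is a unitary matrix that multiplies the vector of amplitudes. The sketch below applies two standard textbook gates, the Hadamard gate (which creates a superposition) and the CNOT gate (which flips the second qubit when the first is 1), to turn |00⟩ into the entangled Bell state. It is a classical model for illustration: real hardware implements gates with physical pulses, not matrix multiplication, and the helper names are our own.

```python
import math

Matrix = list[list[complex]]

def kron(a: Matrix, b: Matrix) -> Matrix:
    """Kronecker (tensor) product of two square matrices."""
    n, m = len(a), len(b)
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(n * m)]
            for i in range(n * m)]

def apply(gate: Matrix, state: list[complex]) -> list[complex]:
    """Apply a gate to a state vector (matrix-vector product)."""
    return [sum(gate[i][j] * state[j] for j in range(len(state)))
            for i in range(len(gate))]

s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]          # Hadamard: puts one qubit into superposition
IDENTITY = [[1, 0], [0, 1]]    # leaves a qubit unchanged
CNOT = [[1, 0, 0, 0],          # flips the second qubit when the first is 1
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

state = [1, 0, 0, 0]                    # both qubits start in |00>
state = apply(kron(H, IDENTITY), state) # Hadamard on the first qubit
state = apply(CNOT, state)              # entangle the two qubits
print([round(abs(a) ** 2, 3) for a in state])  # [0.5, 0.0, 0.0, 0.5]
```

The final probabilities match the Bell state: only 00 and 11 can be observed, each half the time.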

Current Challenges and Limitations

Despite the immense potential, quantum computing faces significant hurdles. Qubit stability is a major challenge. Qubits are incredibly fragile and prone to decoherence, in which interactions with their surroundings, such as heat or stray electromagnetic fields, quickly destroy their quantum properties. This makes performing long and complex computations difficult.
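A common simplified model treats decoherence as exponential decay: the fraction of coherence remaining after time t is roughly exp(-t / T2), where T2 is the qubit's coherence time. The sketch below plugs in hypothetical values for T2 and for the duration of one layer of gates (assumptions for illustration, not figures for any particular machine) to show why deeper circuits suffer more.

```python
import math

# Hypothetical numbers for illustration only; real values vary widely by hardware.
T2_SECONDS = 100e-6        # assumed coherence time: 100 microseconds
GATE_TIME_SECONDS = 50e-9  # assumed duration of one layer of gates: 50 nanoseconds

def coherence_remaining(num_gate_layers: int) -> float:
    """Fraction of coherence left under the simple model exp(-t / T2)."""
    elapsed = num_gate_layers * GATE_TIME_SECONDS
    return math.exp(-elapsed / T2_SECONDS)

for layers in (10, 100, 1_000, 10_000):
    print(f"{layers:>6} layers: {coherence_remaining(layers):.3f}")
```

Under these assumed numbers, a circuit of 10,000 sequential layers would retain well under 1% of its coherence, so its result would be dominated by noise.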

Scalability is another key limitation. Building and maintaining systems with a large number of qubits is technologically demanding and expensive. Current quantum computers have a limited number of qubits, restricting the complexity of algorithms they can execute.

Error rates in quantum operations are far higher than in classical hardware. Developing effective error correction techniques, which spread the information of one logical qubit across many physical qubits, is crucial for building fault-tolerant quantum computers.
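Error correction rests on redundancy. Quantum error correction cannot simply copy qubits (the no-cloning theorem forbids it) and instead relies on extra qubits and indirect "syndrome" measurements, but the core idea behind the simplest scheme, the three-qubit bit-flip code, can be illustrated with its classical counterpart: a three-bit repetition code decoded by majority vote. The sketch below is that classical analogue, not a quantum implementation; the function names and the 1% error rate are illustrative assumptions.

```python
import random

def encode(bit: int) -> list[int]:
    """Repetition code: store one logical bit as three physical copies."""
    return [bit, bit, bit]

def noisy_channel(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each copy independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]

def decode(bits: list[int]) -> int:
    """Majority vote recovers the logical bit if at most one copy flipped."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(p: float) -> float:
    """Exact failure probability: two or three of the three copies flip."""
    return 3 * p**2 * (1 - p) + p**3

p = 0.01
rng = random.Random(0)
trials = 100_000
failures = sum(decode(noisy_channel(encode(0), p, rng)) != 0 for _ in range(trials))
print(f"physical error rate: {p}")
print(f"predicted logical error rate: {logical_error_rate(p):.6f}")
print(f"simulated logical error rate: {failures / trials:.6f}")
```

With p = 1%, the predicted logical error rate is 3p²(1 − p) + p³ ≈ 0.03%. The benefit only appears when p is small enough (below 50% for this toy code), which is why lowering physical error rates and improving error correction go hand in hand.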

Potential Use Cases

While still in its nascent stages, quantum computing holds immense potential to revolutionize various fields. One prominent area is drug discovery and development. Quantum computers are expected to simulate molecular interactions more accurately than classical methods can, which could accelerate the identification of new drug candidates and the optimization of existing ones.

Materials science is another field poised for disruption. Quantum simulations could help predict the properties of new materials, leading to lighter, stronger, and more efficient materials for various applications. Quantum computing may also enhance financial modeling by enabling more complex and accurate risk assessments, portfolio optimization, and fraud detection.

Cryptography is also significantly affected by quantum computing. A sufficiently large quantum computer running Shor’s algorithm could break widely used public-key encryption methods such as RSA, and this threat is driving the development of new, quantum-resistant cryptographic techniques. Finally, quantum computing may accelerate some artificial intelligence and machine learning algorithms, potentially leading to advances in areas such as image recognition, natural language processing, and robotics, although how large such speedups will be remains an open research question.

Quantum Computing in Everyday Life?

While quantum computing holds immense potential, its presence in everyday life is still limited. The technology is in its nascent stages, requiring highly specialized and expensive equipment. Most current quantum computers are accessed through cloud-based platforms by researchers and developers.

However, the impact of quantum computing is expected to grow significantly in the coming years. Fields like drug discovery, materials science, and financial modeling are expected to benefit from quantum algorithms. As the technology matures and becomes more accessible, its integration into daily life, albeit mostly indirect, will become more apparent.